I did this in several steps:

- create an md5 dump of the file system in question. For this I used my favourite command-line tool, `md5deep`,

- sort the file based on file size then md5 hash (sorted from largest to smallest), and

- remove entries that are not duplicate entries.

Although this could be completed in one step, I have broken it into several steps for my own clarity.
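For reference, the one-step version might look something like the sketch below. It is only an illustration: the sample lines and paths are made up, and they assume `md5deep -z` prints the size right-aligned in 10 columns, then two spaces, the 32-character hash, two more spaces and the path. The `-w 44` width comes from that assumed layout (10 + 2 + 32), and `uniq -w` is a GNU extension.

```shell
# Hypothetical sample in the layout md5deep -z is assumed to produce:
# size right-aligned in 10 columns, two spaces, 32-char hash, two spaces, path.
sample=$(printf '%10d  %s  %s\n' \
    6468 06a6770c50edd13140279fec4eacd805 /tmp/a.log \
    86400 4efaef324ae83db0549e199a3685cdc3 /tmp/wtmp \
    6468 06a6770c50edd13140279fec4eacd805 /tmp/copy-of-a.log \
    2111 4ceaa380370a537da5c0e36f932df537 /tmp/syslog.gz)

# Sort by size (numeric, descending), then by hash, and keep one line
# for each size+hash prefix that occurs more than once.
# With real data, the printf would be replaced by: md5deep -rz ~/documents/
dupes=$(printf '%s\n' "$sample" | sort -k1,1nr -k2,2 | uniq -w 44 -d)
printf '%s\n' "$dupes"
```

Only the 6468-byte pair shares both size and hash here, so a single line comes out. Keeping the steps separate, as in the rest of this post, makes it easier to inspect the intermediate files.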

generate hashes using md5deep

simple enough:

    md5deep -rze ~/documents/ > dump.md5.1

- `-r` : recurse subdirectories
- `-z` : includes file size
- `-e` : displays progress on each file

recreate the hashes without filesize (optional)

I wanted the hashes in two forms, one with file sizes and one without. I first created the hash set with file sizes and then stripped the size from the front of each line. This is a lot quicker than hashing all the files twice.

sed to the rescue

    sed -e 's/^[ ]*[0-9]*  //' dump.md5.1 > dump.md5.2

Simple enough: I know each line starts with any number of spaces (including none), followed by the file size, which can only be a run of digits, and finally two spaces (easy to miss, sitting between the final asterisk and the double slash).

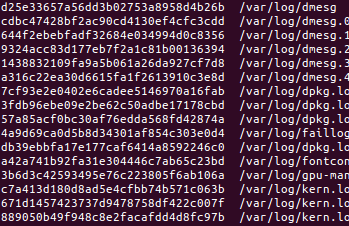

    dav3@dubunt:~$ tail temp.md5
    2111  4ceaa380370a537da5c0e36f932df537  /var/log/syslog.7.gz
    86400  4efaef324ae83db0549e199a3685cdc3  /var/log/wtmp
    346938  9a254850fcd98d94eb991f591eff4772  /var/log/udev
    109440  8353823cde9dcfd415e2c639e2db4924  /var/log/wtmp.1
    56641  1e0c5a881f6e9bd0f8a8c60461051368  /var/log/Xorg.0.log
    9030  5ec0e371a8841a9f9e46cbe0ec128c36  /var/log/Xorg.1.log
    167150  ad1d9c65dd2edd089ba3e8388f68f1f9  /var/log/Xorg.0.log.old
    41438  416bf352c4d00d8912c827bdbc5e9c09  /var/log/Xorg.1.log.old
    6468  06a6770c50edd13140279fec4eacd805  /var/log/Xorg.failsafe.log
    6468  3659d35af858878602b60ed053f32df2  /var/log/Xorg.failsafe.log.old
    dav3@dubunt:~$ sed -e 's/^[ ]*[0-9]*  //' < temp.md5 > temp.md5.2
    madivad@garage:~$ tail temp.md5.2
    4ceaa380370a537da5c0e36f932df537  /var/log/syslog.7.gz
    4efaef324ae83db0549e199a3685cdc3  /var/log/wtmp
    9a254850fcd98d94eb991f591eff4772  /var/log/udev
    8353823cde9dcfd415e2c639e2db4924  /var/log/wtmp.1
    1e0c5a881f6e9bd0f8a8c60461051368  /var/log/Xorg.0.log
    5ec0e371a8841a9f9e46cbe0ec128c36  /var/log/Xorg.1.log
    ad1d9c65dd2edd089ba3e8388f68f1f9  /var/log/Xorg.0.log.old
    416bf352c4d00d8912c827bdbc5e9c09  /var/log/Xorg.1.log.old
    06a6770c50edd13140279fec4eacd805  /var/log/Xorg.failsafe.log
    3659d35af858878602b60ed053f32df2  /var/log/Xorg.failsafe.log.old

sort largest to smallest

We need to sort the file on two keys: first the file size, then the hash.

    sort -n -r -k1,1 -k2,2 < temp.md5 > temp.md5.3

We COULD just sort by hash and then by size, but it is possible (if very unlikely) for two files of different sizes to share the same hash. More to the point, what we are after is a list with the largest files first, grouped by hash within each size, because we are likely to have files of the same size that are nonetheless different files. Which leads us to…
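To see the two-key ordering in isolation, here is a small sketch on made-up lines. The short "hashes" and paths are placeholders, not real MD5 values. It also uses per-key options (`-k1,1nr -k2,2`) rather than the global `-n -r` above, so that only the size is compared numerically and in reverse while the hash is compared as plain text. That is a variation for illustration, not the command used in this post.

```shell
# Hypothetical size/hash/path lines; the hashes are placeholders.
lines='100 bbbb /x/one
2000 aaaa /x/two
100 aaaa /x/three
100 bbbb /x/four
2000 aaaa /x/five'

# Key 1 (size): numeric, descending. Key 2 (hash): plain text comparison.
sorted=$(printf '%s\n' "$lines" | sort -k1,1nr -k2,2)
printf '%s\n' "$sorted"
```

The 2000-byte entries come first, and within the 100-byte entries the `aaaa` line is kept apart from the two `bbbb` lines, which end up adjacent and ready for the next step.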

remove lines that are not duplicated elsewhere

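We can now pass this list through `uniq`:

    uniq -w 40 -d -c < temp.md5.3 > temp.md5.4

- `-w 40` : compare only the first 40 characters of each line, so the file names are ignored
- `-d` : print only lines that are repeated, one per group
- `-c` : prefix each line with the number of times it occurred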
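One thing to be aware of is that `-d` prints a single representative line per group, so the other copies' paths are not in the output. If you want every member listed, GNU `uniq` also has `-D`, which cannot be combined with `-c`. The sketch below compares the two on made-up, already-sorted lines. It assumes the size column is 10 characters wide and followed by two spaces, which is why it uses `-w 44` (10 + 2 + 32) rather than the 40 above.

```shell
# Hypothetical, already-sorted lines in the assumed md5deep -z layout.
sorted=$(printf '%10d  %s  %s\n' \
    86400 4efaef324ae83db0549e199a3685cdc3 /tmp/wtmp \
    6468 06a6770c50edd13140279fec4eacd805 /tmp/a.log \
    6468 06a6770c50edd13140279fec4eacd805 /tmp/b.log \
    6468 3659d35af858878602b60ed053f32df2 /tmp/c.log)

# -d -c: one line per duplicated group, prefixed with the group size.
counted=$(printf '%s\n' "$sorted" | uniq -w 44 -d -c)

# -D: every line that belongs to a duplicated group.
all_members=$(printf '%s\n' "$sorted" | uniq -w 44 -D)

printf '%s\n---\n%s\n' "$counted" "$all_members"
```

Here `counted` holds one line for the `a.log`/`b.log` pair with a count of 2, while `all_members` lists both paths. `c.log` has the same size but a different hash, so it appears in neither.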